BY MOLLY BUCKLEY | October 3, 2025

This is the tenth and final installment in a blog series documenting EFF's findings from the Stop Censoring Abortion campaign. You can read additional posts at https://www.eff.org/pages/stop-censoring-abortion.

When we launched Stop Censoring Abortion, our goals were to understand how social media platforms were silencing abortion-related content, gather data and lift up stories of censorship, and hold social media companies accountable for the harm they have caused to the reproductive rights movement.

Thanks to nearly 100 submissions from educators, advocates, clinics, researchers, and individuals around the world, we confirmed what many already suspected: this speech is being removed, restricted, and silenced by platforms at an alarming rate. Together, our findings paint a clear picture of censorship in action: platforms’ moderation systems are not only broken, but are actively harming those seeking and sharing vital reproductive health information.

Here are the key lessons from this campaign: what we uncovered, how platforms can do better, and why pushing back against this censorship matters more now than ever.

Lessons Learned

Across our submissions, we saw systemic over-enforcement, vague and convoluted policies, arbitrary takedowns, sudden account bans, and ignored appeals. And in almost every case we reviewed, the posts and accounts in question did not violate any of the platform’s stated rules.

The most common reason Meta gave for removing abortion-related content was that it violated policies on Restricted Goods and Services, which prohibit any “attempts to buy, sell, trade, donate, gift or ask for pharmaceutical drugs.” But most of the content submitted simply provided factual, educational information that clearly did not violate those rules. As we saw in the M+A Hotline’s case, this kind of misclassification deprives patients, advocates, and researchers of reliable information, and chills those trying to provide accurate and life-saving reproductive health resources.

In one submission, we even saw posts sharing educational abortion resources get flagged under the “Dangerous Organizations and Individuals” policy, a rule intended to prevent terrorism and criminal activity. We’ve seen this policy cause problems in the past, but in the reproductive health space, treating legal and accurate information as violent or unlawful only adds needless stigma and confusion.

Meta’s convoluted advertising policies add another layer of harm. There are specific, additional rules users must navigate to post paid content about abortion. While many of these rules still contain exceptions for purely educational content, Meta is vague about how and when those exceptions apply. And ads that seem like they should have been allowed were frequently flagged under rules about “prescription drugs” or “social issues.” This patchwork of unclear policies forces users to second-guess what content they can post or promote for fear of losing access to their networks.

In another troubling trend, many of our submitters reported experiencing shadowbanning and de-ranking, where posts weren’t removed but were instead quietly suppressed by the algorithm. This kind of suppression leaves advocates without any notice, explanation, or recourse—and severely limits their ability to reach people who need the information most.

Many users also faced sudden account bans without warning or clear justification. Though Meta’s policies dictate that an account should only be disabled or removed after “repeated” violations, organizations like Women Help Women received no warning before seeing their critical connections cut off overnight.

Finally, we learned that Meta’s enforcement outcomes were deeply inconsistent. Users often had their appeals denied and accounts suspended until someone with insider access to Meta could intervene. For example, the Red River Women’s Clinic, RISE at Emory, and Aid Access each had their accounts restored only after press attention or personal contacts stepped in. This reliance on backchannels underscores the inequity in Meta’s moderation processes: without connections, users are left unfairly silenced.

It’s Not Just Meta

Most of our submissions detailed suppression that took place on one of Meta’s platforms (Facebook, Instagram, WhatsApp, and Threads), so we decided to focus our analysis on Meta’s moderation policies and practices. But we should note that this problem is by no means confined to Meta.

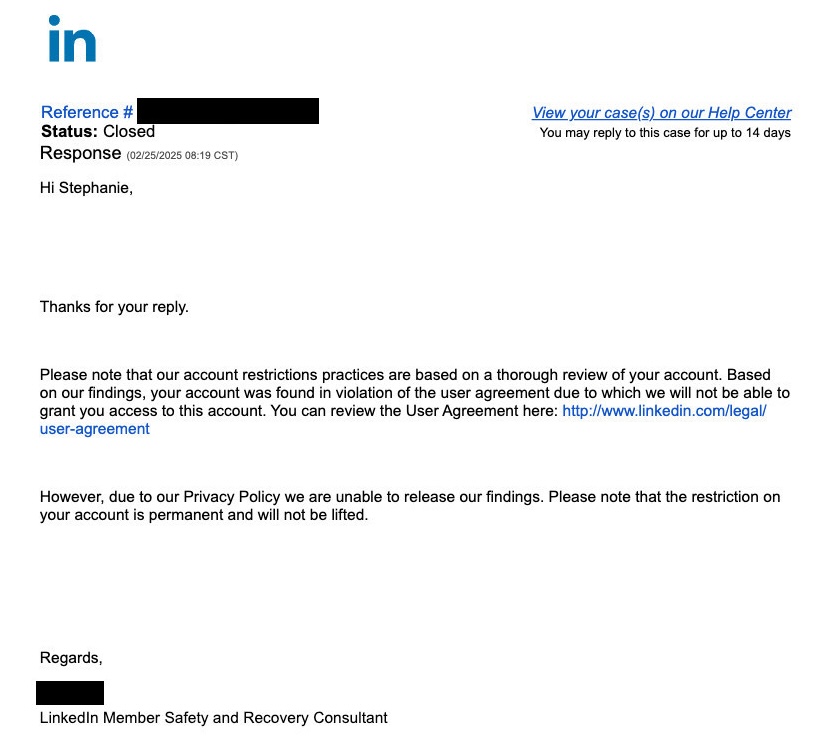

On LinkedIn, for example, Stephanie Tillman told us about how she had her entire account permanently taken down, with nothing more than a vague notice that she had violated LinkedIn’s User Agreement. When Stephanie reached out to ask what violation she committed, LinkedIn responded that “due to our Privacy Policy we are unable to release our findings,” leaving her with no clarity or recourse. Stephanie suspects that the ban was related to her work with Repro TLC, an advocacy and clinical health care organization, and/or her posts relating to her personal business, Feminist Midwife LLC. But LinkedIn’s opaque enforcement meant she had no way to confirm these suspicions, and no path to restoring her account.

Screenshot provided by Stephanie Tillman to EFF (with personal information redacted by EFF)

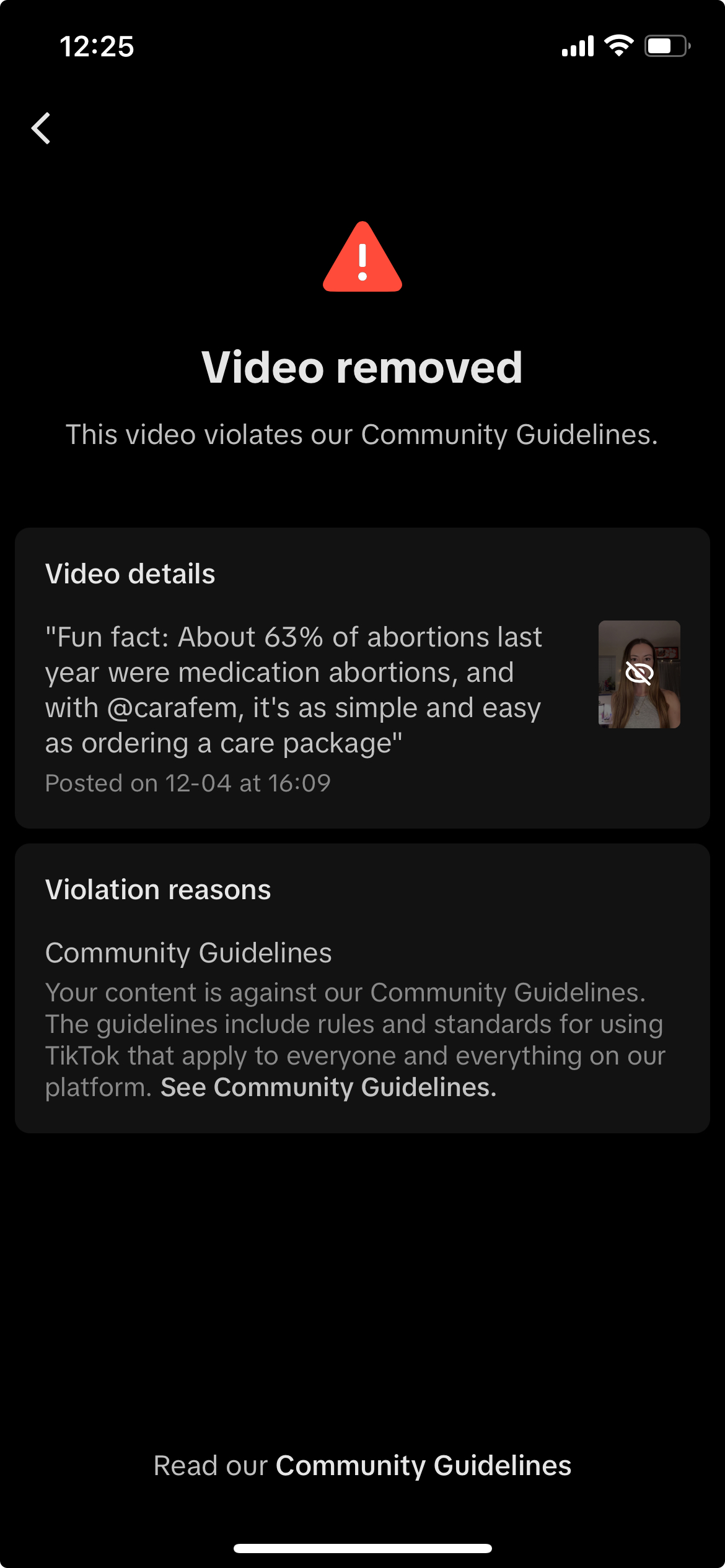

And over on Tiktok, Brenna Miller, a creator who works in health care and frequently posts about abortion, posted a video of her “unboxing” an abortion pill care package from Carafem. Though Brenna’s video was factual and straightforward, TikTok removed it, saying that she had violated TikTok’s Community Guidelines.

Screenshot provided by Brenna Miller to EFF

Brenna appealed the removal successfully at first, but a few weeks later the video was permanently deleted—this time, without any explanation or chance to appeal again.

Brenna’s far from the only one experiencing censorship on TikTok. Even Jessica Valenti, award-winning writer, activist, and author of the Abortion Every Day newsletter, recently had a video taken down from TikTok for violating its community guidelines, with no further explanation. The video she posted was about the Trump administration calling IUDs and the Pill “abortifacients.” Jessica wrote:

> Which rule did I break? Well, they didn’t say: but I wasn’t trying to sell anything, the video didn’t feature nudity, and I didn’t publish any violence. By process of elimination, that means the video was likely taken down as “misinformation.” Which is…ironic.

These are not isolated incidents. In the Center for Intimacy Justice’s survey of reproductive rights advocates, health organizations, sex educators, and businesses, 63% reported having content removed on Meta platforms, 55% reported the same on TikTok, and 66% reported having ads rejected from Google platforms (including YouTube). Clearly, censorship of abortion-related content is a systemic problem across platforms.

How Platforms Can Do Better on Abortion-Related Speech

Based on our findings, we're calling on platforms to take these concrete steps to improve moderation of abortion-related speech:

- Publish clear policies. Users should not have to guess whether their speech is allowed or not.

- Enforce rules consistently. If a post does not violate a written standard, it should not be removed.

- Provide real transparency. Enforcement decisions must come with clear, detailed explanations and meaningful opportunities to appeal.

- Guarantee functional appeals. Users must be able to challenge wrongful takedowns without relying on insider contacts.

- Expand human review. Reproductive rights is a nuanced issue and can be too complex to be left entirely to error-prone automated moderation systems.

Practical Tips for Users

Don’t get it twisted: Users should not have to worry about their posts being deleted or their accounts getting banned when they share factual information that doesn’t violate platform policies. The onus is on platforms to get it together and uphold their commitments to users. But while platforms continue to fail, we’ve provided some practical tips to reduce the risk of takedowns, including:

- Consider limiting commonly flagged words and images. Posts with pill images or certain keyword combinations (like “abortion,” “pill,” and “mail”) were often flagged.

- Be as clear as possible. Vague phrases like “we can help you get what you need” might look like drug sales to an algorithm.

- Be careful with links. Direct links to pill providers were often flagged. Spell out the links instead.

- Expect stricter rules for ads. Boosted posts face harsher scrutiny than regular posts.

- Appeal wrongful enforcement decisions. Requesting an appeal might get you a human moderator or, even better, review from Meta’s independent Oversight Board.

- Document everything and back up your content. Screenshot all communications and enforcement decisions so you can share them with the press or advocacy groups, and export your data regularly in case your account vanishes overnight.

Keep Fighting

Abortion information saves lives, and social media is the primary—and sometimes only—way for advocates and providers to get accurate information out to the masses. But now we have evidence that this censorship is widespread, unjustified, and harming communities who need access to this information most.

Platforms must be held accountable for these harms, and advocates must continue to speak out. The more we push back—through campaigns, reporting, policy advocacy, and user action—the harder it will be for platforms to look away.

So keep speaking out, and keep demanding accountability. Platforms need to know we're paying attention—and we won't stop fighting until everyone can share information about abortion freely, safely, and without fear of being silenced.

This is the tenth and final post in our blog series documenting the findings from our Stop Censoring Abortion campaign. Read more at https://www.eff.org/pages/stop-censoring-abortion.

Affected by unjust censorship? Share your story using the hashtag #StopCensoringAbortion. Amplify censored posts and accounts, and share screenshots of removals and platform messages. Together, we can demonstrate how these policies harm real people.