After last week’s violent attack on the Capitol, law enforcement is working overtime to identify the perpetrators. This is critical to accountability for the attempted insurrection. Law enforcement has many, many tools at their disposal to do this, especially given the very public nature of most of the organizing. But we object to one method reportedly being used to determine who was involved: law enforcement using facial recognition technologies to compare photos of unidentified individuals from the Capitol attack to databases of photos of known individuals. There are just too many risks and problems in this approach, both technically and legally, to justify its use.


EFF Opposes Government Use of Face Recognition

Make no mistake: the attack on the Capitol can and should be investigated by law enforcement. The attackers’ use of public social media to both prepare and document their actions will make the job easier than it otherwise might be.

But a ban on all government use of face recognition, including its use by law enforcement, remains a necessary precaution to protect us from this dangerous and easily misused technology. This includes a ban on the government's use of information that other government actors or third-party services obtain through face recognition.

One such service is Clearview AI, which allows law enforcement officers to upload a photo of an unidentified person and, allegedly, get back publicly posted photos of that person. Clearview has reportedly seen a huge increase in usage since the attack. Yet Clearview built its database by collecting faceprints, without consent, from millions of unsuspecting users across the web, on sites like Facebook, YouTube, and Venmo, and storing them along with links to where those photos were posted on the Internet. This means that police are comparing images of the rioters to images of many millions of people who were never involved, probably including you.

EFF opposes law enforcement use of Clearview and has filed an amicus brief against the company in a suit brought by the ACLU. The suit correctly alleges that Clearview's faceprinting without consent violates the Illinois Biometric Information Privacy Act (BIPA).

Separately, police tracking down the Capitol attackers are likely running face recognition searches against government-controlled databases, such as those maintained by state DMVs. We also oppose this use of face recognition technology, which matches images collected through nearly universal practices like applying for a driver’s license. Most people need government-issued identification or a license, yet they have no ability to opt out of this kind of face surveillance.

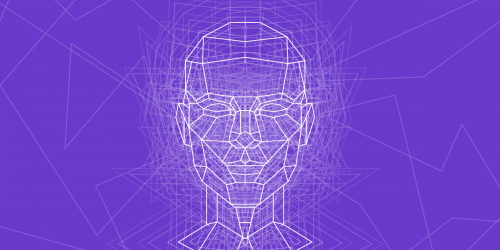

Face Recognition Impacts Everyone, Not Only Those Charged With Crimes

The number of people affected by government use of face recognition is staggering: from DMV databases alone, roughly two-thirds of the U.S. population is at risk of image surveillance and misidentification, with no choice to opt out. Further, Clearview has extracted faceprints from over 3 billion images. This is not a question of “what happens if face recognition is used against you?” It is a question of how many times law enforcement has already done so.

For many of the same reasons, EFF also opposes government identification of those at the Capitol by means of dragnet searches of cell phone records of everyone present. Such searches have many problems, from the fact that users are often not actually where records indicate they are, to this tactic’s history of falsely implicating innocent people. The Fourth Amendment was written specifically to prevent these kinds of overbroad searches.

Government Use of Facial Recognition Would Chill Protected Protest Activity

Facial surveillance technology allows police to track people not only after the fact but also in real time, including at lawful political protests. Police repeatedly used this same technology to arrest people who participated in last year’s Black Lives Matter protests. Its normalization and widespread use by the government would fundamentally change the society in which we live. It would, for example, chill and deter people from exercising their First Amendment-protected rights to speak, peacefully assemble, and associate with others.

Many studies have shown that when people think the government is watching them, they alter their behavior to try to avoid scrutiny. This burden has historically fallen disproportionately on communities of color, immigrants, religious minorities, and other marginalized groups.

Face surveillance technology is also prone to error and has already implicated multiple people for crimes they did not commit.

Government use of facial recognition crosses a bright red line, and we should not normalize its use, even during a national tragedy. In responding to this unprecedented event, we must thoughtfully consider not just the unexpected ramifications that any new legislation could have, but also the hazards posed by surveillance techniques like facial recognition. This technology poses a profound threat to personal privacy, racial justice, political and religious expression, and the fundamental freedom to go about our lives without having our movements and associations covertly monitored and analyzed.