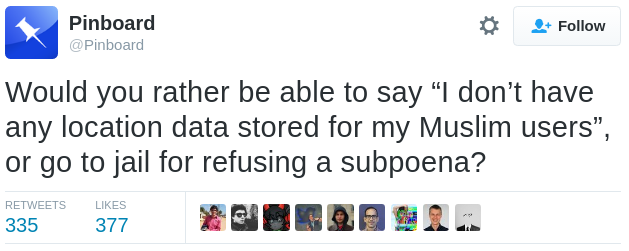

The results of the U.S. presidential election have put the tech industry in a risky position. President-Elect Trump has promised to deport millions of our friends and neighbors, track people based on their religious beliefs, and undermine users’ digital security and privacy. He’ll need Silicon Valley’s cooperation to do it—and Silicon Valley can fight back.

If Mr. Trump carries out these plans, they will likely be accompanied by unprecedented demands on tech companies to hand over private data on people who use their services. This includes the conversations, thoughts, experiences, locations, photos, and more that people have entrusted to platforms and service providers. Any of these might be turned against users under a hostile administration.

We present here a series of recommendations that go above and beyond the classic necessities of security (such as enabling two-factor authentication and encrypting data on disk). If a tech product might be co-opted to target a vulnerable population, now is the time to minimize the harm that can be done. To this end, we recommend technical service providers take the following steps to protect their users, as soon as possible:

1. Allow pseudonymous access.

Give your users the freedom to access your service pseudonymously. As we've previously written, real-name policies and their ilk are especially harmful to vulnerable populations, including pro-democracy activists and the LGBT community. For bonus points, don't restrict access to logged-in users.

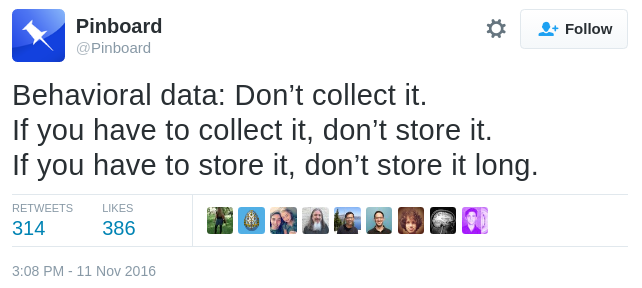

2. Stop behavioral analysis.

Do not attempt to use your data to make decisions about user preferences and characteristics, such as political preference or sexual orientation, that users did not explicitly specify themselves. If you do any sort of behavioral tracking, whether on your own service or across others, let users opt out. This means letting users modify data that's been collected about them so far, and giving them the option to not have your service collect this information about them at all.

When you expose inferences to users, allow them both to remove or edit individual inferences and to opt out entirely. If your algorithms make a mistake or mislabel a person, the user should be able to correct you. Furthermore, ensure that your internal systems mirror and respect these preferences. When users opt out, delete their data and stop collecting it moving forward. Offering an opt-out from targeting but not from tracking is unacceptable.
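As a rough illustration of what "delete their data and stop collecting it" can mean inside a system, here is a minimal in-memory sketch. The `BehaviorStore` class and its method names are hypothetical, invented for this example; a real service would have to apply the same two rules to every database, cache, and backup that holds behavioral data.

```python
class BehaviorStore:
    """Hypothetical in-memory store of behavioral events, keyed by user ID."""

    def __init__(self):
        self._events = {}        # user_id -> list of recorded events
        self._opted_out = set()  # user IDs that must never be tracked

    def record(self, user_id, event):
        """Store an event unless the user has opted out. Returns True if stored."""
        if user_id in self._opted_out:
            return False  # opting out of tracking means nothing is collected
        self._events.setdefault(user_id, []).append(event)
        return True

    def opt_out(self, user_id):
        """Stop future collection and delete everything collected so far."""
        self._opted_out.add(user_id)
        self._events.pop(user_id, None)

    def events_for(self, user_id):
        """Return a copy of the events currently held for a user."""
        return list(self._events.get(user_id, []))
```

The point of the sketch is that the opt-out check lives at the point of collection, not only at the point of ad targeting.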

3. Free up disk space and delete those logs.

Now is the time to clean up the logs. If you need them to check for abuse or for debugging, think carefully about which precise pieces of data you really need. And then delete them regularly—say, every week for the most sensitive data. IP addresses are especially risky to keep. Avoid logging them, or if you must log them for anti-abuse or statistics, do so in separate files that you can aggregate and delete frequently. Reject user-hostile measures like browser fingerprinting.
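One way to make this routine is a small scheduled job. The sketch below assumes logs live as `*.log` files in a single directory and that IP addresses, if kept at all, go to a separate file with one address per line. The seven-day window follows the "every week" suggestion above; the file layout and function names are assumptions made for illustration.

```python
import time
from pathlib import Path
from typing import List, Optional

# Assumed retention window: one week, per the suggestion for sensitive data.
RETENTION_SECONDS = 7 * 24 * 60 * 60


def purge_old_logs(log_dir: Path, now: Optional[float] = None) -> List[Path]:
    """Delete *.log files last modified before the retention window."""
    now = time.time() if now is None else now
    removed = []
    for path in sorted(log_dir.glob("*.log")):
        if now - path.stat().st_mtime > RETENTION_SECONDS:
            path.unlink()
            removed.append(path)
    return removed


def aggregate_and_delete(ip_log: Path) -> int:
    """Reduce a one-IP-per-line file to a unique-address count, then delete it."""
    lines = ip_log.read_text().splitlines()
    unique = {line.strip() for line in lines if line.strip()}
    ip_log.unlink()  # only the aggregate count survives
    return len(unique)
```

Run from a daily scheduler, `purge_old_logs` enforces the retention window and `aggregate_and_delete` keeps only a statistic rather than the addresses themselves. Note that unlinking a file does not securely erase it from disk or from backups, so those need their own retention policy.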

4. Encrypt data in transit.

Seriously, encrypt data in transit. Why are you not already encrypting data in transit? Do the ISP and the entire internet need to know about the information your users are reading, the things they're buying, the places they're going? It's 2016. Turn on HTTPS by default.
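In practice, "HTTPS by default" usually means redirecting every plain-HTTP request and telling browsers to stay on HTTPS via the `Strict-Transport-Security` header. Here is a minimal sketch as WSGI middleware, using only the Python standard library. The `force_https` name is hypothetical, and the sketch assumes the server already has a valid certificate, listens on the default ports, and reports the real scheme in `wsgi.url_scheme` (which may not hold behind a TLS-terminating proxy).

```python
from urllib.parse import quote


def force_https(app, hsts_max_age=31536000):
    """Wrap a WSGI app: redirect HTTP requests, add HSTS to HTTPS responses."""

    def wrapper(environ, start_response):
        if environ.get("wsgi.url_scheme") != "https":
            # Rebuild the requested URL with the https scheme (default ports assumed).
            host = environ.get("HTTP_HOST") or environ.get("SERVER_NAME", "")
            path = quote(environ.get("PATH_INFO", "/"))
            query = environ.get("QUERY_STRING", "")
            location = "https://" + host + path + ("?" + query if query else "")
            start_response(
                "301 Moved Permanently",
                [("Location", location), ("Content-Length", "0")],
            )
            return [b""]

        def hsts_start_response(status, headers, exc_info=None):
            # Ask browsers to use HTTPS for this host for the next year.
            headers = list(headers) + [
                ("Strict-Transport-Security", "max-age=%d" % hsts_max_age)
            ]
            return start_response(status, headers, exc_info)

        return app(environ, hsts_start_response)

    return wrapper
```

Many deployments do the same thing in the web server or load balancer configuration instead; the application-level version is shown only because it is easy to read end to end.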

5. Enable end-to-end encryption by default.

If your service includes messages, enable end-to-end encryption by default. Are you offering a high-value service, like AI-powered recommendations or search, that doesn’t work on encrypted data? Well, the benefits of encrypted data have just spiked, as has popular demand for it. Now is the time to re-evaluate that tradeoff. If end-to-end encryption must be off by default, offering a separate end-to-end encrypted mode is not enough. You must give users the option to turn on end-to-end encryption universally within the application, so that they never risk accidentally sending a message unencrypted.