A few years ago, when you saw a security camera, you may have thought that the video feed went to a VCR somewhere in a back office and was reviewed only after a crime occurred. Or maybe you imagined a sleepy guard who paid only half-attention until they noticed a crime in progress. In the age of internet connectivity, it’s easy to imagine footage sitting on a server somewhere, with any given image inaccessible except to someone willing to fast-forward through hundreds of hours of footage.

That may be how it worked in 1990s heist movies, and it may be how a homeowner still sorts through their own home security camera footage. But that's not how cameras operate in today's security environment. Instead, advanced algorithms are watching every frame on every camera and documenting every person, animal, vehicle, and backpack as they move through physical space, and thus camera to camera, over an extended period of time.

The term "video analytics" seems boring, but don't confuse it with how many views you got on your YouTube “how to poach an egg” tutorial. In a law enforcement or private security context, video analytics refers to using machine learning, artificial intelligence, and computer vision to automate ubiquitous surveillance.

Through the Atlas of Surveillance project, EFF has found more than 35 law enforcement agencies that use advanced video analytics technology. That number is steadily growing as we discover new vendors, contracts, and capabilities. To better understand how this software works, who uses it, and what it’s capable of, EFF has acquired a number of user manuals. And yes, they are even scarier than we thought.

BriefCam, which is often packaged with Genetec video technology, is frequently used at real-time crime centers. These are police surveillance facilities that aggregate camera footage and other surveillance information from across a jurisdiction. Dozens of police departments use BriefCam to search through hours of footage from multiple cameras in order to, for instance, zero in on a particular face or a backpack of a specific color. This power of video analytics software would be particularly scary if used to identify people exercising their First Amendment right to protest.

Avigilon systems are a bit more opaque, since they are often sold to businesses, which aren't subject to the same transparency laws. In San Francisco, for instance, Avigilon provides the cameras and software for at least six Business Improvement Districts (BIDs) and Community Benefit Districts (CBDs). These districts blanket neighborhoods in surveillance cameras and relay the footage back to a central control room. Avigilon’s video analytics can perform object identification (such as determining whether things are cars or people), license plate reading, and potentially face recognition.

You can read the Avigilon user manual here, and the BriefCam manual here. The latter was obtained through the California Public Records Act by Dylan Kubeny, a student journalist at the University of Nevada, Reno Reynolds School of Journalism.

But what exactly are these software systems' capabilities? Here’s what we learned:

Pick a Face, Track a Face, Rate a Face

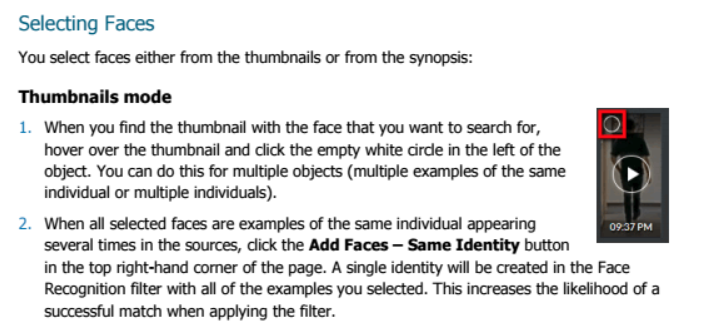

If you're watching video footage on BriefCam, you can select any face, then add it to a "watchlist." Then, with a few more clicks, you can retrieve every piece of video you have with that person's face in it.

BriefCam rates every face image from one to three stars. One star: the AI can't even recognize it as a person. Two stars: medium confidence. Three stars: high confidence.

Detection of Unusual Events

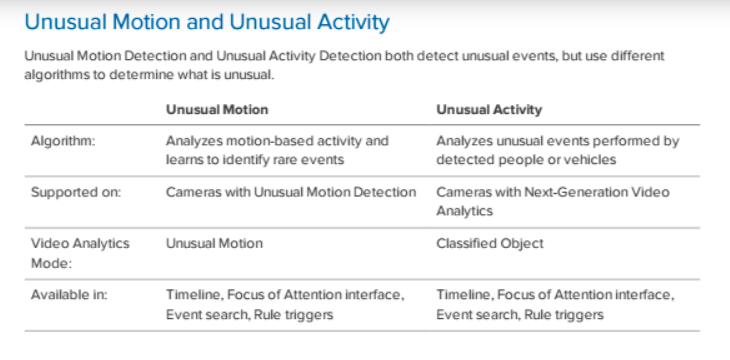

Avigilon has a pair of algorithms that it uses to predict what it calls "unusual events."

The first can detect "unusual motions," essentially patterns of pixels that don't match what you'd normally expect in the scene. It takes two weeks to train this self-learning algorithm. The second can detect "unusual activity" involving cars and people. It only takes a week to train.

Also, there's "Tampering Detection," which, depending on how you set it, can be triggered by a moving shadow:

"Enter a value between 1-10 to select how sensitive a camera is to tampering Events. Tampering is a sudden change in the camera field of view, usually caused by someone unexpectedly moving the camera. Lower the setting if small changes in the scene, like moving shadows, cause tampering events. If the camera is installed indoors and the scene is unlikely to change, you can increase the setting to capture more unusual events."
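To see why a sensitivity dial like this can turn a passing shadow into an alert, here is a rough sketch in Python. It is not Avigilon's algorithm, which the manual does not describe. It assumes the simplest possible approach (average pixel change between two grayscale frames), and the function names and the mapping from the 1-10 setting to a threshold are made up for illustration.

```python
def tamper_score(prev_frame, frame):
    """Mean absolute difference between two equal-sized grayscale frames (values 0-255)."""
    total = 0
    count = 0
    for prev_row, row in zip(prev_frame, frame):
        for a, b in zip(prev_row, row):
            total += abs(a - b)
            count += 1
    return total / count


def threshold_for(sensitivity):
    """Hypothetical mapping: a higher setting (1-10) means a lower trigger threshold."""
    if not 1 <= sensitivity <= 10:
        raise ValueError("sensitivity must be between 1 and 10")
    return (11 - sensitivity) * 10.0  # setting 10 -> 10.0, setting 1 -> 100.0


def is_tampering(prev_frame, frame, sensitivity):
    """Flag a tampering event when the scene changes by at least the threshold."""
    return tamper_score(prev_frame, frame) >= threshold_for(sensitivity)


# A tiny 4x4 "scene": evenly lit, then half covered by a shadow, then fully changed.
base = [[200] * 4 for _ in range(4)]
shadow = [[160] * 4 if r < 2 else [200] * 4 for r in range(4)]
moved = [[50] * 4 for _ in range(4)]

print(is_tampering(base, shadow, 10))  # shadow at the most sensitive setting
print(is_tampering(base, shadow, 5))   # same shadow at a middle setting
print(is_tampering(base, moved, 1))    # whole view changed, least sensitive setting
```

In this toy version, the shadow trips the alarm only at the highest setting, while a wholesale change in the view trips it even at the lowest. The point is that whoever sets the dial decides what counts as an "event."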

Pink Hair and Short Sleeves

With BriefCam’s shade filter, a person searching a crowd could filter by the color and length of clothing, accessories, or even hair. BriefCam’s manual even states that the program can search a crowd or a large collection of footage for someone with pink hair.

In addition, users of BriefCam can search specifically by what a person is wearing and other “personal attributes.” Law enforcement attempting to sift through crowd footage or hours of video could search for someone by specifying blue jeans or a yellow short-sleeved shirt.

Man, Woman, Child, Animal

BriefCam sorts people and objects into specific categories to make them easier for the system to search for. BriefCam breaks people into the three categories of “man,” “woman,” and “child.” Scientific studies show that this type of categorization can misidentify gender nonconforming, nonbinary, trans, and disabled people whose bodies may not conform to the rigid criteria the software looks for when sorting people. Such misidentification can have real-world harms, like triggering misguided investigations or denying access.

The software also breaks down other categories, including distinguishing between different types of vehicles and recognizing animals.

Proximity Alert

In addition to monitoring the total number of objects in a frame or the relative size of objects, BriefCam can detect proximity between people and the duration of their contact. This might make BriefCam a prime candidate for “COVID-19 washing,” or rebranding invasive surveillance technology as a potential solution to the current public health crisis.
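For a sense of how little it takes to turn tracked positions into a record of who stood near whom, and for how long, here is a minimal Python sketch. It is not BriefCam's implementation. The track format, the function name `contact_durations`, and the distance threshold are all assumptions made for illustration, and the distances here are in image coordinates, which do not map cleanly onto real-world feet or meters.

```python
import math
from itertools import combinations


def contact_durations(tracks, max_distance, frame_interval):
    """Longest continuous stretch of proximity for each pair of tracked people.

    tracks: {track_id: {frame_index: (x, y)}} (hypothetical format)
    max_distance: how close two positions must be to count as "contact"
    frame_interval: seconds between consecutive frames
    Returns {(id_a, id_b): seconds} for pairs that were ever in proximity.
    """
    results = {}
    for a, b in combinations(sorted(tracks), 2):
        # Only frames where both people were detected can be compared.
        shared = sorted(set(tracks[a]) & set(tracks[b]))
        longest = run = 0
        prev = None  # last frame index at which the pair was close
        for f in shared:
            if math.dist(tracks[a][f], tracks[b][f]) <= max_distance:
                # Extend the run only if the previous close frame was adjacent.
                run = run + 1 if prev is not None and f == prev + 1 else 1
                longest = max(longest, run)
                prev = f
            else:
                run = 0
                prev = None
        if longest:
            results[(a, b)] = longest * frame_interval
    return results


tracks = {
    "p1": {0: (0, 0), 1: (1, 0), 2: (2, 0), 3: (3, 0)},
    "p2": {0: (10, 0), 1: (2, 0), 2: (3, 0), 3: (20, 0)},
}
print(contact_durations(tracks, max_distance=1.5, frame_interval=0.5))
```

Once a system is already tracking every person in frame, a few dozen lines like these are enough to log every encounter, which is why a "public health" framing deserves scrutiny.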

Avigilon also claims it can detect skin temperature, raising another possible assertion of public health benefit. But, as we’ve argued before, remote thermal imaging is often very inaccurate and fails to detect asymptomatic virus carriers.

Public health is a collective effort. Deploying invasive surveillance technologies that could easily be used to monitor protestors and track political figures is likely to breed more distrust of the government. This will make public health collaboration less likely, not more.

Watchlists

One feature available with both BriefCam and Avigilon is the watchlist, and we don't mean a notebook full of names. Instead, the systems allow you to upload folders of faces and spreadsheets of license plates, and then the algorithm will find matches and track the targets’ movement. The underlying watchlists can be extremely problematic. For example, EFF has looked at hundreds of policy documents for automated license plate readers (ALPRs) and it is very rare for an agency to describe the rules for adding someone to a watchlist.

Vehicles Worldwide

Often, ALPRs are associated with England, the birthplace of the technology, and the United States, where it has metastasized. But Avigilon already has its sights set on new markets and has programmed its technology to identify license plates across six continents.

It's worth noting that Avigilon is owned by Motorola Solutions, the same company that operates the infamous ALPR provider Vigilant Solutions.

Conclusion

We’re heading into a dangerous time. The lack of oversight of police acquisition and use of surveillance technology has dangerous consequences for those misidentified or caught up in the self-fulfilling prophecies of AI policing.

In fact, Dr. Rashall Brackney, the Charlottesville Police Chief, described these video analytics as perpetuating racial bias at a recent panel. Video analytics "are often incorrect," she said. "Over and over they create false positives in identifying suspects."

This new era of video analytics capabilities causes at least two problems. First, police could rely more and more on this secretive technology to dictate who to investigate and arrest by, for instance, identifying the wrong hooded and backpacked suspect. Second, people who attend political or religious gatherings will justifiably fear being identified, tracked, and punished.

Over a dozen cities across the United States have banned government use of face recognition, and that’s a great start. But this only goes so far. Surveillance companies are already planning ways to get around these bans by using other types of video analytic tools to identify people. Now is the time to push for more comprehensive legislation to defend our civil liberties and hold police accountable.

To learn more about Real-Time Crime Centers, read our latest report here.

Banner image source: Mesquite Police Department pricing proposal.