For governments interested in suppressing information online, the old methods of direct censorship are getting less and less effective.

Over the past month, the Thai government has made escalating attempts to suppress critical information online. In the last week, faced with an embarrassing video of the Thai King, the government ordered Facebook to geoblock over 300 pages on the platform and even threatened to shut Facebook down in the country. This is on top of last month's announcement that the government had banned any online interaction with three individuals: two academics and one journalist, all three of whom are political exiles and prominent critics of the state. And just today, law enforcement representatives described their efforts to target those who simply view—not even create or share—content critical of the monarchy and the government.

The Thai government has several methods at its disposal to directly block large volumes of content. It could, as it has in the past, pressure ISPs to block websites. It could also hijack domain name queries, making sites harder to access. So why is it negotiating with Facebook instead of just blocking the offending pages itself? And what are Facebook’s responsibilities to users when this happens?

HTTPS and Mixed-Use Social Media Sites

The answer is, in part, HTTPS. When HTTPS encrypts your browsing, it doesn’t just protect the contents of the communication between your browser and the websites you visit. It also hides which specific pages you request on those sites, preventing censors from seeing and blocking anything “after the slash” in a URL. This means that if a sensitive video of the King shows up on a website, government censors can’t identify and block only the pages on which it appears. In an HTTPS world, where such granular censorship is impossible, the government’s only direct censorship option is to block the site entirely.
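To make the “after the slash” point concrete, here is a minimal sketch in Python of what a passive observer on the network could read from a request. It is a simplified model, not a description of any real censorship system: the function names `observer_view` and `blocking_options` and the `social.example` URLs are hypothetical, and the sketch assumes the hostname still leaks through DNS lookups and the TLS handshake while the path and query string are encrypted.

```python
from urllib.parse import urlsplit


def observer_view(url: str) -> dict:
    """Return the URL parts a passive on-path observer could read.

    Simplified model (an assumption for illustration): with HTTPS the
    hostname is still visible, but the path and query are encrypted.
    """
    parts = urlsplit(url)
    if parts.scheme == "https":
        # Everything "after the slash" travels inside the encrypted channel.
        return {"host": parts.hostname, "path": None, "query": None}
    # Plain HTTP exposes the full URL to anyone watching the connection.
    return {"host": parts.hostname, "path": parts.path, "query": parts.query}


def blocking_options(url: str) -> str:
    """Describe how finely a network-level censor could block this URL."""
    view = observer_view(url)
    if view["path"] is None:
        return "whole site only"
    return "single page or whole site"


if __name__ == "__main__":
    for url in ("http://social.example/pages/video-123",
                "https://social.example/pages/video-123"):
        print(url, "->", blocking_options(url))
```

Under these assumptions, the censor watching the HTTPS request learns only that someone visited `social.example`, which is why its remaining choices are to block the whole site or to ask the site's operator to do the blocking.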

That might still leave the government with tenable censorship options if critical speech and dissenting activity only happened on certain sites, like devoted blogs or message boards. A government could try to get away with blocking such sites wholesale without disrupting users outside a certain targeted political sphere.

But all sorts of user-generated content—from calls to revolution to cat pictures—are converging on social media websites like Facebook, which members of every political party use and rely on. This brings us to the second part of the answer as to why the government can’t censor like it used to: mixed-use social media sites. When content is both HTTPS-encrypted and on a mixed-use social media site like Facebook, it can be too politically expensive to block the whole site. Instead, the only option left is pressuring Facebook to do targeted blocking at the government’s request.

Government Requests for Social Media Censorship

Government requests for targeted blocking happen when content complies with Facebook’s community guidelines but violates a country’s domestic law. This comes to a head when social media platforms have large user bases in repressive, censorious states. That dynamic certainly applies in Thailand, where a military dictatorship shares its capital city with a dense population of Facebook power-users and one of the most Instagrammed locations on earth.

In Thailand, the video of the King in question violated the country’s overbroad lese majeste defamation laws, which prohibit insulting or criticizing the monarchy in any way. So the Thai government requested on legal grounds that Facebook remove it, along with hundreds of other pieces of content, and made an ultimately empty threat to shut down the platform in Thailand if Facebook did not comply.

Facebook did comply, geoblocking over 100 URLs for which it received warrants from the Thai government. This may not be surprising; although the government is unlikely to block Facebook entirely, it still has other ways to go after the company, including threatening any in-country staff. Indeed, Facebook put itself in a vulnerable position when it inexplicably opened a Bangkok office during high political tensions after the 2014 military coup.

Platforms’ Responsibility to Users

If companies like Facebook do comply with government demands to remove content, these decisions must be transparent to their users and the general public. Otherwise, Facebook's compliance transforms its role from a victim of censorship to a company pressured to act as a government censor. The stakes are high, especially in unstable political environments like Thailand. There, the targets of takedown requests are often journalists, activists, and dissidents, and requests to take down their content or block their pages often serve as an ominous prelude to further action or targeting.

With that in mind, Facebook and other companies responding to government requests must provide the fullest legally permissible notice to users whenever possible. This means timely, informative notifications, on the record, that give users information like what branch of government requested to take down their content, on what legal grounds, and when the request was made.

Facebook seems to be getting better at this, at least in Thailand. When journalist Andrew MacGregor Marshall had content of his geoblocked in January, he did not receive consistent notice. Worse, the page that his readers in Thailand saw when they tried to access his post implied that the block was an error, not a deliberate act of government-mandated removal.

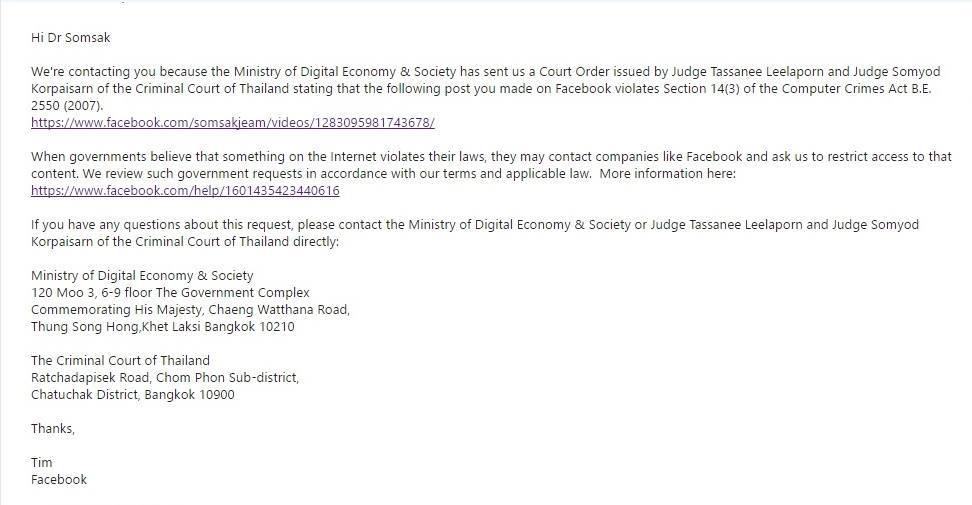

More recently, however, we have been happy to see evidence of Facebook providing more detailed notices to users, like the notice that exiled dissident Dr. Somsak Jeamteerasakul received and then shared online.

In an ideal world, timely and informative user notice can help power the Streisand effect: that is, the dynamic in which attempts to suppress information actually backfire and draw more attention to it than ever before. (And that’s certainly what’s happening with the video of the King, which has garnered countless international media headlines.) With details, users are in a better position to appeal to Facebook directly as well as draw public attention to government targeting and censorship, ultimately making this kind of censorship a self-defeating exercise for the government.

In an HTTP environment where governments can passively spy on and filter Internet content, individual pages could disappear behind obscure and misleading error messages. Moving to an increasingly HTTPS-secured world means that if social media companies are transparent about the pressure they face, we may gain some visibility into government censorship. However, if they comply without informing creators or readers of blocked content, we could find ourselves in a much worse situation. Without transparency, tech giants could misuse their power not only to silence vulnerable speakers, but also to obscure how that censorship takes place—and who demanded it.

Have you had your content or account removed from a social media platform? At EFF, we’ve been shining a light on the breadth of content removal on social media platforms with OnlineCensorship.org, where we and our partners at Visualizing Impact collect your stories about content and account deletions. Share your story at OnlineCensorship.org.